Fiction Belongs on Your AI Reading List

Candice Bryant Consulting

Strategic Intelligence & Public Affairs

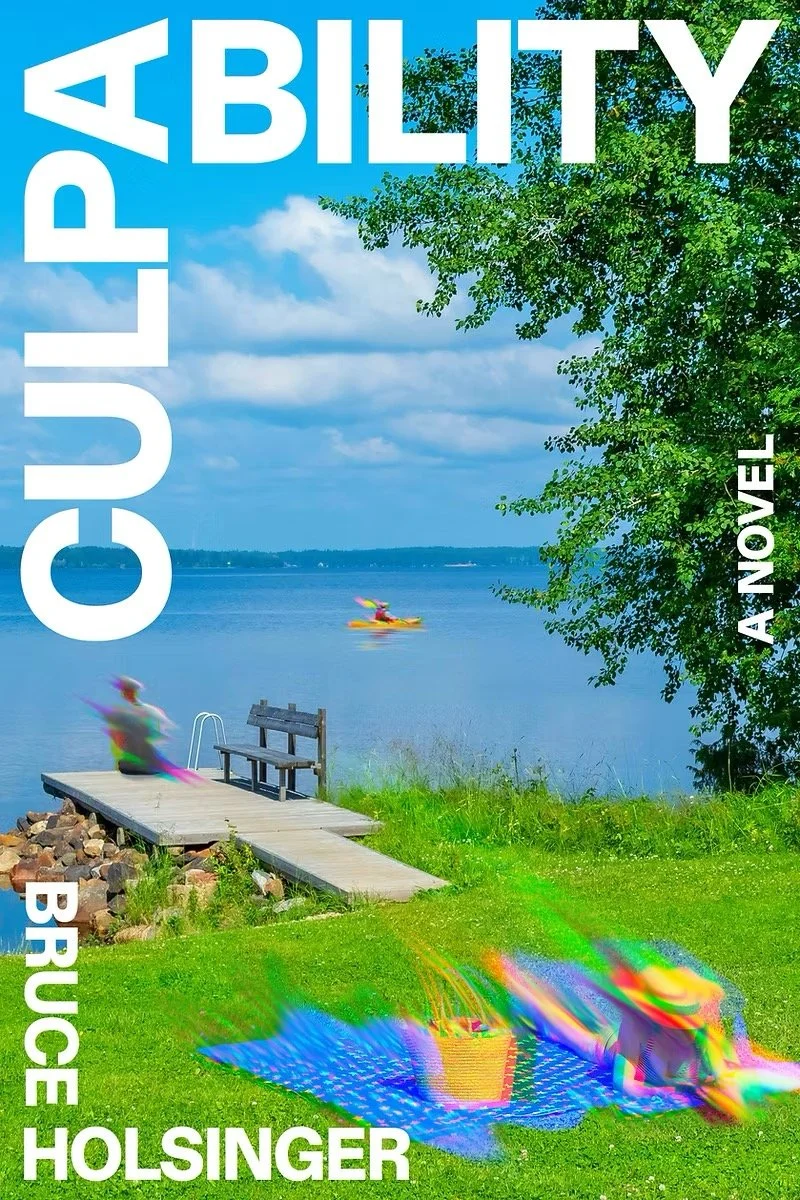

I recently finished Bruce Holsinger’s Culpability, a novel that makes the debate around AI ethics deeply personal. The story’s central dilemma: a family is in a fatal accident in their AI-powered self-driving car after the teenage son jerks the wheel. It then emerges that the mother, Lorelei, designed the car’s algorithm. Holsinger shows how impossible it is to untangle fault when technology and human lives intersect.

While the book has many layers, it raises a crucial question for our own lives: what is our role in shaping AI’s impact?

The Weight of Responsibility

Lorelei’s work isn’t theoretical; it carries real stakes. She is driven by the powerful, logical argument for her technology:

“Over forty thousand people die in car accidents every year in this country… These autonomous systems could save hundreds of thousands of lives.”

Yet she also carries the immense personal burden of every potential failure:

“Every future death in a SensTrek car will be linked, at least in part, to Lorelei Shaw… she will forever imagine herself in the computational cockpit, the pilot of a mechanized slaughter always outweighed by the far greater number of lives saved by her work.”

Holsinger grounds this moral problem not in technical mechanics, but in its stark human consequence.

Another Way

Later, when offered a Pentagon role, Lorelei’s instinct is to accept: if not her, then who? But her husband, seeing the emotional toll this responsibility has already taken, helps her find another path. Writing, he realizes, can be a necessary outlet for her and just as impactful as building the technology. He tells her:

“Most people…we’re idiots about this stuff. We have no idea what’s really going on with AI… You have a Ph.D. in ethical philosophy. So translate what you know into language someone like me can comprehend.”

Lorelei’s choice to write about AI, rather than build it, doesn’t diminish her impact. It affirms that meaningful contributions come in many forms. Engineers build, educators explain, policymakers regulate, and communicators clarify. Trust in AI isn’t built by a single act of invention—it’s nurtured by the ecosystem of people who shape how it is understood, deployed, and overseen.